How AI in Cybersecurity Protects Generative AI Systems from Adversarial Attacks

Table of Contents

Introduction

These days, generative AI systems are increasingly embedded in enterprise, government, and critical infrastructure environments. While they’re best known for supporting customer interaction and software development, they’re now supplementing or even replacing legacy AI systems in areas like analysis and decision support.

Generally speaking, these systems have delivered clear productivity gains. However, they are increasingly being scrutinised for introducing a distinct and rapidly evolving attack surface that could be easily exploited by malicious actors. This is partly because, unlike traditional software, generative models can be compromised not only through infrastructure vulnerabilities but through the inputs they process, the data they learn from, and the outputs they generate.

In the real world, adversarial techniques such as prompt injection, training data poisoning, model extraction, and unauthorised data disclosure are already being observed, in increasing numbers. Notably, many of these attacks bypass conventional perimeter defences altogether, instead targeting the behaviour and decision logic of the model itself. As generative AI is rapidly deployed in higher-risk contexts, often by teams that may not yet fully understand its implications, the risks only increase.

To manage these risks, organisations must also apply next-generation AI in cybersecurity alongside their generative AI systems. Utilising current-generation artificial intelligence machine learning systems to monitor anomalies and enforce safeguards dynamically can lead to more resilient and trustworthy AI deployments. Let’s go through how this technology integration works in various contexts.

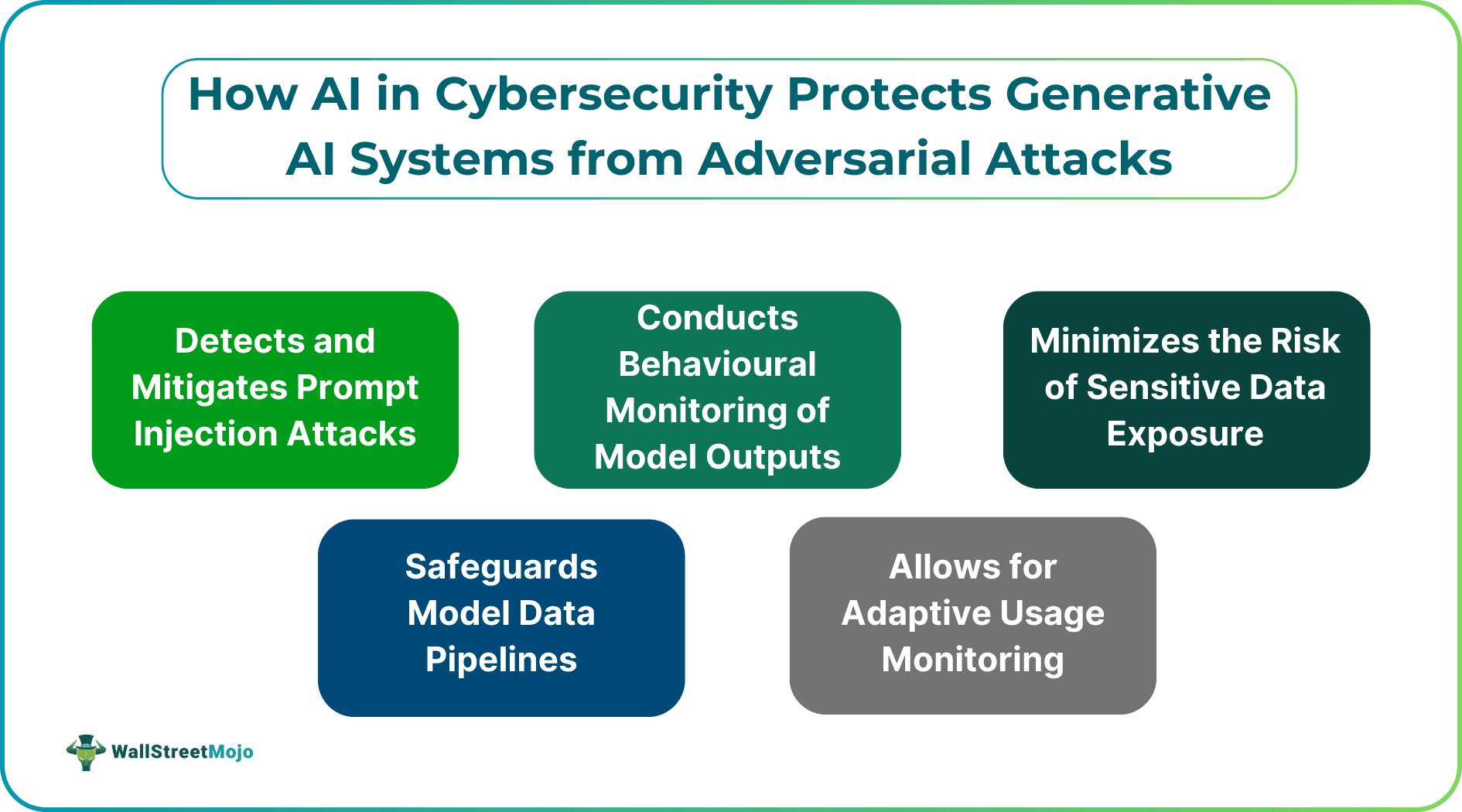

#1 - Detecting and Mitigating Prompt Injection Attacks

Prompt injection attacks exploit injected inputs that override system instructions or take advantage of contextual weaknesses. As part of LLM Security, AI-based security mechanisms can analyse prompts for abnormal structure, intent, or semantic patterns associated with manipulation, addressing the limitations of static filters. These systems can also learn from evolving attack techniques and flag high-risk inputs in real time, enabling prompts to be blocked before they influence model behaviour.

#2 - Behavioural Monitoring of Model Outputs

Not all attacks are immediately visible at the input stage. In some cases, adversaries probe models gradually, observing how their responses change over time. By systematically analysing outputs, these model extraction attacks can replicate proprietary AI models or give attackers clues to how best to attack them, later on. Over time, attackers may approximate a model’s decision logic, undermining its underlying intellectual property protections and security controls.

To defend against these attacks, continuous behavioural monitoring allows security systems to establish baselines for expected outputs across different contexts. This dynamic, behaviour-based approach helps detect novel attack patterns, enabling organisations to project a truly proactive defence posture.

#3 - Reducing the Risk of Sensitive Data Exposure

Generative AI systems must often interact with all kinds of sensitive data. Attackers may attempt to exploit model behaviour to extract sensitive information, even when access controls are in place.

AI-enabled cybersecurity tools can inspect generated outputs using natural language processing and data classification techniques. By identifying patterns associated with confidential data or policy violations, these systems can redact, suppress, or reformulate responses before they are delivered to users, reducing the risk of inadvertent data leakage. To further protect these AI workflows at the integration layer, strong API security ensures that only authorized applications and users can access model endpoints, helping prevent abuse and data exposure across connected systems.

#4 - Safeguarding Model Data Pipelines

Training data poisoning involves the injection of malicious or biased data in an attempt to make the model behave a certain way. Manual reviews alone are often too slow for countering these threats, but AI-based security controls can monitor training datasets for anomalies in real time and at scale. They can correlate data inputs with downstream model behaviour, blocking suspicious patterns early and preventing compromised data from degrading model integrity.

#5 - Adaptive Access Control and Usage Monitoring

Generative AI systems are commonly accessed via APIs or integrated applications, and these gateways can be vulnerable to abuse. Such attacks as excessive querying, automated probing, or insider misuse may not always be easily detected through static access controls.

Again, AI-driven cybersecurity enables adaptive usage monitoring by continuously assessing request patterns, volumes, and context. When abnormal behaviour is detected, systems can apply graduated responses, reducing exposure without blocking service altogether.

It’s Time You Invest in Credible Protection Against Gen AI Threats

With each passing tech cycle, the generative AI systems used by malicious actors are only becoming more capable and more widely deployed. Traditional cybersecurity approaches are no longer sufficient on their own, having mostly been conceptualised in an era when static defences often proved more than sufficient. In applying AI to cybersecurity systems themselves, organisations can gain the ability to monitor generative AI attacks continuously as well as detect the increasingly subtle forms of manipulation emerging today.

Viewed this way, securing generative AI is not simply a technical add-on as previous generations of improvements have been. Organisations that wish to protect their stakeholders must start seeing it as a foundational requirement for protecting data and systems responsibly, and at scale.