Life Science Software Development: From Data Management to AI-Driven Insights


Introduction

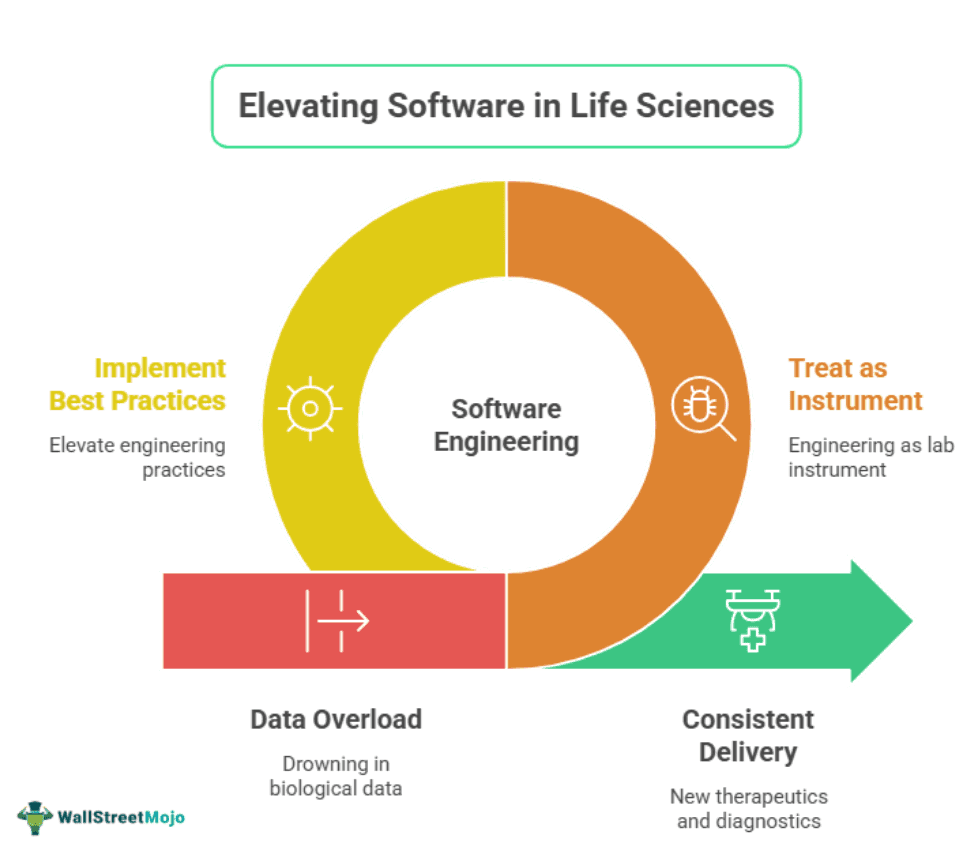

Modern biology is awash in data: high-throughput sequencing, single-cell omics, terabyte-scale imaging, real-time sensor streams from bioreactors, and clinical metadata pouring in from digital health platforms. What separates a lab drowning in this flood from one that consistently delivers new therapeutics or diagnostics is software and, increasingly, life science software development practices that give engineering the same rigor as any scientific instrument. In 2025, the life-science organizations winning patents, press, and partnerships have something in common: they treat software engineering as a first-class lab instrument, a mindset also reflected by providers such as DXC Technology.

Why Software Has Become the Bottleneck in Biology

Sequencers double their output every 6-9 months, and cryo-EM microscopes now resolve structures in hours. Meanwhile, drug-discovery timelines have barely budged except where software development is part of the core strategy. DeepMind’s AlphaFold jump-started structure prediction, but its published model and code became genuinely useful at scale only when developers wrapped APIs, caching layers, and visualization components around them, much as pharmaceutical compliance software turns raw regulatory data into operationally usable workflows.

In other words, generating data is no longer the hard part; making that data searchable, trustworthy, and machine-actionable is. This shift turns bioinformatics into “bio-software-engineering,” where design patterns from fintech and big-data web firms finally meet the lab bench.

The Foundation: Robust Data Management

Good AI begins with great data plumbing. Before dreaming about generative protein models, teams must tame the basics of capture, normalization, and storage, an area increasingly supported by custom life science software development tailored to specialized workflows. Bringing a virtual assistant into your workflow can also help streamline routine data management without compromising the quality of your AI projects.

#1 - Capture: Electronic Lab Notebooks (ELNs) and LIMS

- ELNs as dynamic UIs. Modern ELNs (e.g., Benchling) and related platforms such as SciBite’s DOCstore are increasingly built as React front-ends backed by GraphQL APIs, enabling real-time validation of assay parameters and sample barcodes at the point of entry.

- Context-rich LIMS. Next-generation LIMS platforms embed ontology tagging (OBI, Cellosaurus) so that downstream ML pipelines can query “all induced pluripotent stem-cell lines treated with JAK inhibitors” without hand-written SQL.
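
The two ideas above, point-of-entry validation and tag-based querying, can be sketched in a few lines of Python. This is a minimal illustration, not any ELN or LIMS vendor's API: the barcode convention, the parameter bounds, the `SampleRecord` type, and the `select` helper are all hypothetical, and free-text tags stand in for the ontology term IDs a real system would store.

```python
import re
from dataclasses import dataclass, field

# Hypothetical barcode convention: two capital letters, a dash, six digits ("SC-004211").
BARCODE = re.compile(r"^[A-Z]{2}-\d{6}$")

# Hypothetical assay parameter bounds enforced at the point of entry.
PARAM_BOUNDS = {"incubation_temp_c": (4.0, 42.0), "volume_ul": (0.5, 1000.0)}


@dataclass
class SampleRecord:
    barcode: str
    params: dict
    # Ontology annotations keyed by facet. Free-text values keep the sketch short;
    # a real LIMS would store term IDs from OBI, Cellosaurus, etc.
    tags: dict = field(default_factory=dict)


def validate(record):
    """Return validation errors; an empty list means the entry is accepted."""
    errors = []
    if not BARCODE.match(record.barcode):
        errors.append(f"malformed barcode {record.barcode!r}")
    for name, (low, high) in PARAM_BOUNDS.items():
        value = record.params.get(name)
        if value is None:
            errors.append(f"missing {name}")
        elif not low <= value <= high:
            errors.append(f"{name}={value} outside [{low}, {high}]")
    return errors


def select(records, **facets):
    """Return records whose tags match every requested facet value (no SQL needed)."""
    return [r for r in records if all(r.tags.get(k) == v for k, v in facets.items())]


records = [
    SampleRecord("SC-004211", {"incubation_temp_c": 37.0, "volume_ul": 50.0},
                 {"cell_type": "induced pluripotent stem cell", "treatment": "JAK inhibitor"}),
    SampleRecord("SC-004212", {"incubation_temp_c": 37.0, "volume_ul": 50.0},
                 {"cell_type": "induced pluripotent stem cell", "treatment": "vehicle"}),
]
hits = select(records, cell_type="induced pluripotent stem cell", treatment="JAK inhibitor")
```

Rejecting a record at entry time is far cheaper than discovering a malformed barcode during model training; the query side shows why consistent tags matter more than the storage engine behind them.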

#2 - Normalize: FAIR by Design

In 2025, the FAIR (Findable, Accessible, Interoperable, Reusable) principles have real operational force: big pharma partners now write FAIR compliance directly into collaboration contracts. This pushes data engineering teams to embed FAIR expectations directly into their ETL/ELT flows. Raw sequencing outputs, QC summaries, and cleaned read sets are all registered in the data catalog from the moment they land, with QC metrics indexed for searchability, raw files marked and tracked as primary sources, and processed artifacts exposed through controlled-access mechanisms such as signed URLs.
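
The registration flow described above can be sketched as a small in-memory catalog. This is an illustrative assumption, not a specific catalog product: the `register`, `search_qc`, `signed_url`, and `verify` helpers are hypothetical, and the HMAC-with-expiry scheme is one common way to build signed URLs rather than any cloud provider's exact format.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode

# Hypothetical signing secret; a real catalog would fetch this from a secrets manager.
SIGNING_KEY = b"demo-secret-do-not-use"

CATALOG = {}  # object-store key -> catalog entry


def register(key, kind, qc_metrics=None):
    """Register an object the moment it lands in the object store."""
    if kind not in ("raw", "qc_summary", "processed"):
        raise ValueError(f"unknown kind {kind!r}")
    CATALOG[key] = {
        "kind": kind,
        "primary_source": kind == "raw",  # raw files are tracked as primary sources
        "qc_metrics": dict(qc_metrics or {}),  # indexed so they are searchable
    }
    return CATALOG[key]


def search_qc(metric, minimum):
    """Find objects whose indexed QC metric meets a threshold."""
    return sorted(k for k, e in CATALOG.items()
                  if e["qc_metrics"].get(metric, float("-inf")) >= minimum)


def _sign(key, expires):
    return hmac.new(SIGNING_KEY, f"{key}:{expires}".encode(), hashlib.sha256).hexdigest()


def signed_url(key, base_url, ttl_s=900, now=None):
    """Issue a time-limited URL; only processed artifacts are exposed this way."""
    if CATALOG.get(key, {}).get("kind") != "processed":
        raise PermissionError(f"{key} is not a registered processed artifact")
    expires = int(time.time() if now is None else now) + ttl_s
    return f"{base_url}/{key}?" + urlencode({"expires": expires, "sig": _sign(key, expires)})


def verify(key, expires, sig, now=None):
    """Check expiry and signature, as the download endpoint would."""
    if (time.time() if now is None else now) > expires:
        return False
    return hmac.compare_digest(_sign(key, expires), sig)
```

`hmac.compare_digest` is used for the signature check because it runs in constant time, which avoids leaking how many characters of a forged signature were correct.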

Rather than treating metadata as an afterthought, organizations maintain it alongside the object-store keys in versioned table formats, most commonly Apache Iceberg or Delta Lake. These formats provide schema evolution, time travel, and auditability, which together keep data consistently discoverable and let downstream pipelines interoperate and reuse data without manual stitching.
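
To make "time travel" and "schema evolution" concrete, here is a toy snapshot-based table in plain Python. It is deliberately not the Iceberg or Delta Lake API; the `VersionedTable` class and its methods are hypothetical and only mirror the ideas: every write produces a new immutable snapshot, old snapshots stay readable, and new columns are added without rewriting history.

```python
class VersionedTable:
    """Toy metadata table in which every append creates an immutable snapshot.

    It illustrates the ideas only (snapshots, time travel, additive schema
    evolution); it is not the Iceberg or Delta Lake API.
    """

    def __init__(self, columns):
        self._snapshots = [(tuple(columns), ())]  # snapshot 0: empty table

    def append(self, rows, new_columns=()):
        schema, old_rows = self._snapshots[-1]
        schema = schema + tuple(c for c in new_columns if c not in schema)
        for row in rows:
            unknown = set(row) - set(schema)
            if unknown:
                raise ValueError(f"columns not in schema: {sorted(unknown)}")
        # Older rows gain any new columns as None; earlier snapshots are untouched.
        merged = tuple({c: r.get(c) for c in schema} for r in (*old_rows, *rows))
        self._snapshots.append((schema, merged))
        return len(self._snapshots) - 1

    def read(self, snapshot_id=-1):
        """Read the latest snapshot, or time-travel to an earlier one."""
        schema, rows = self._snapshots[snapshot_id]
        return list(schema), [dict(r) for r in rows]
```

An auditor can replay exactly what a pipeline saw at snapshot `n` with `read(n)`, even after a `qc_pass` column has been added to later snapshots.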

#3 - Store: Cloud-Native Data Lakes

Managed services such as AWS HealthOmics, often combined with databases like Google’s AlloyDB Omni and governance layers such as Microsoft Purview, are go-to building blocks for petabyte-scale stores. Two best practices have emerged:

- Partition by biological question, not file origin (e.g., “immunophenotyping” vs. “flow-cytometer-3”).

- Apply layered security groups for preclinical, clinical, and post-market data to respect regulatory silos without duplicating data.
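
Both practices can be sketched together: keys are built around the biological question, and a single copy of each object is guarded by tier-based group grants. The key layout, tier names, group names, and helper functions below are hypothetical examples, not a cloud provider's IAM model.

```python
TIERS = ("preclinical", "clinical", "post_market")

# Hypothetical group grants: one physical copy of the data, several access layers.
GROUP_GRANTS = {
    "discovery-scientists": {"preclinical"},
    "clinical-ops": {"preclinical", "clinical"},
    "pharmacovigilance": {"clinical", "post_market"},
}


def object_key(question, tier, dataset_id, filename):
    """Build a key whose top-level prefix is the biological question, not the instrument."""
    if tier not in TIERS:
        raise ValueError(f"unknown tier {tier!r}")
    slug = "-".join(question.lower().split())
    return f"{slug}/tier={tier}/{dataset_id}/{filename}"


def can_read(groups, key):
    """Allow access if any of the caller's groups is granted the key's tier."""
    tier = key.split("/")[1].split("=", 1)[1]
    return any(tier in GROUP_GRANTS.get(g, set()) for g in groups)


key = object_key("Immunophenotyping", "clinical", "ds42", "panel_07.fcs")
# key == "immunophenotyping/tier=clinical/ds42/panel_07.fcs"
```

Because the tier is encoded in the key prefix, a bucket policy or query engine can enforce the same boundary without copying data into separate silos.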

Building for Scale: Modern Architecture Patterns

Once the data layer is solid, engineering attention turns to performance and reproducibility.

#1 - Microservices + Event Streams

The old monolithic bioinformatics systems collapsed as soon as single-cell experiments began producing tens of thousands of BAM files per run. Teams now favor microservices deployed on Kubernetes that communicate over gRPC. An event bus (Kafka or AWS EventBridge) broadcasts events such as “new_sample_registered” or “variant_call_completed,” so downstream services trigger without polling.
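
The publish/subscribe flow can be illustrated with an in-process stand-in for the event bus. This sketch does not use the Kafka or EventBridge client APIs; the `EventBus` class and the handler names are hypothetical, and dispatch here is synchronous, whereas a real broker delivers events durably and asynchronously.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for Kafka/EventBridge: each topic fans out to its subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        for handler in self._subscribers[topic]:
            handler(payload)


bus = EventBus()
log = []


def start_alignment(event):
    # Hypothetical alignment service: reacts to new samples, then announces its result.
    log.append(f"align {event['sample_id']}")
    bus.publish("variant_call_completed",
                {"sample_id": event["sample_id"], "vcf": f"{event['sample_id']}.vcf.gz"})


def annotate(event):
    # Hypothetical annotation service: triggered by the upstream event, never polls.
    log.append(f"annotate {event['vcf']}")


bus.subscribe("new_sample_registered", start_alignment)
bus.subscribe("variant_call_completed", annotate)
bus.publish("new_sample_registered", {"sample_id": "S001"})
```

After the last line runs, `log` holds the alignment step followed by the annotation step; neither service knows the other exists, which is what lets teams add consumers without touching producers.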

#2 - Containerized Pipelines

Reproducibility and GxP requirements demand deterministic environments. Nextflow and Snakemake pipelines are distributed as OCI-compliant containers, version-pinned in an internal registry. GitOps tooling (Argo CD or Flux) ensures that the same commit is applied across dev, validation, and production clusters.
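
One way to enforce version pinning is a CI check that rejects any image reference not pinned by content digest, since tags such as `:latest` are mutable and digests are not. The sketch assumes the pipeline's image references have already been collected into a list; the regex and the `unpinned_images` helper are hypothetical, not part of Nextflow, Snakemake, or any registry API.

```python
import re

# A reference pinned by content digest, e.g. "registry.internal/tools/bwa@sha256:<64 hex chars>".
DIGEST_REF = re.compile(r"^[\w.\-/:]+@sha256:[0-9a-f]{64}$")


def unpinned_images(image_refs):
    """Return image references that are not pinned by sha256 digest."""
    return [ref for ref in image_refs if not DIGEST_REF.match(ref)]


refs = [
    "registry.internal:5000/tools/bwa@sha256:" + "a" * 64,
    "registry.internal/tools/samtools:1.19",
]
# A CI job would fail the build whenever unpinned_images(refs) is non-empty.
```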

#3 - Observability Dashboards

Prometheus scrapes both infrastructure metrics and domain-specific counters (e.g., “reads_processed_per_min”). Grafana boards unite these with experiment metadata so scientists can ask, “Did server latency or reagent batch cause yesterday’s drop in QC?”
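
Prometheus convention favors monotonically increasing counters over pre-computed rates, so a per-minute figure like “reads_processed_per_min” is usually derived at query time, e.g. with `rate(reads_processed_total[1m]) * 60` in PromQL. The stdlib sketch below renders such a counter in the Prometheus text exposition format; in practice the official `prometheus_client` library would do this, and the `LabeledCounter` class, metric name, and labels here are illustrative assumptions. A `reagent_batch` label is what lets Grafana line throughput up against experiment metadata.

```python
from collections import defaultdict


class LabeledCounter:
    """Minimal counter rendered in the Prometheus text exposition format."""

    def __init__(self, name, help_text, label_names):
        self.name, self.help_text = name, help_text
        self.label_names = tuple(label_names)
        self._values = defaultdict(float)

    def inc(self, amount=1.0, **labels):
        if amount < 0:
            raise ValueError("counters can only increase")
        key = tuple(labels[n] for n in self.label_names)
        self._values[key] += amount

    def render(self):
        lines = [f"# HELP {self.name} {self.help_text}", f"# TYPE {self.name} counter"]
        for key, value in sorted(self._values.items()):
            labels = ",".join(f'{n}="{v}"' for n, v in zip(self.label_names, key))
            lines.append(f"{self.name}{{{labels}}} {value}")
        return "\n".join(lines) + "\n"


reads = LabeledCounter("reads_processed_total", "Reads processed by the aligner.",
                       ["run_id", "reagent_batch"])
reads.inc(1200, run_id="R17", reagent_batch="LOT-88")
exposition = reads.render()
```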

From Pipelines to Predictions: Integrating AI

With data flowing and services scaling, AI finally has a fighting chance to add value.

#1 - Selecting the Right Model Class

- Structured relational data: Gradient-boosted trees or tabular transformers remain state-of-the-art for predicting fermentation yield from dozens of process variables.

- Images: Vision transformers fine-tuned on self-supervised histopathology datasets outperform CNNs in low-data regimes.

- Sequences: Protein language models (ESM-2, ProGen2) provide embeddings that accelerate docking simulations tenfold.

#2 - Feature Governance

AI misfires often trace to “ghost” columns: variables that leak allocation bias or timestamp ordering. Modern MLOps stacks, such as Tecton and Feast, track feature lineage, including code snippets, data sources, and approval statuses. Regulatory auditors increasingly request this lineage.
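
The lineage record and a basic leakage check can be sketched without any feature-store dependency. This does not use the Tecton or Feast APIs; `FeatureDefinition`, `leaking_features`, and `training_ready` are hypothetical, and the leak check shown is the simple point-in-time rule: a feature observed after the label time must not be used to predict that label.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    source: str                # upstream table or dataset
    transform_code: str        # code snippet that computes the feature
    approval: str = "pending"  # "pending" | "approved" | "rejected"

    @property
    def code_hash(self):
        """Short fingerprint so auditors can tie a model to the exact transform code."""
        return hashlib.sha256(self.transform_code.encode()).hexdigest()[:12]


def leaking_features(feature_times, label_time):
    """Names of features observed after the label time (timestamp-ordering leakage)."""
    return sorted(name for name, t in feature_times.items() if t > label_time)


def training_ready(definitions):
    """Only approved features may enter a training set."""
    return all(d.approval == "approved" for d in definitions)


label_time = datetime(2025, 3, 1)
feature_times = {"od600_day3": datetime(2025, 2, 27), "final_titer": datetime(2025, 3, 2)}
```

Here `final_titer` is exactly the kind of ghost column the text warns about: it looks predictive only because it is measured after the outcome it is meant to predict.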

#3 - Deployment Patterns

- In-cluster Inference. Latency-sensitive use cases (colony-picking robots) serve ONNX models via Triton servers running on the same Kubernetes node as the camera stream.

- Batch Prediction. Overnight retrosynthetic route planning runs on cloud spot instances orchestrated by Apache Airflow, pushing results to a shared Neo4j knowledge graph.

#4 - Human-in-the-Loop

AI makes suggestions; scientists decide. UIs now present “confidence ribbons” and link model outputs back to raw data snapshots so reviewers can drill down. Feedback (approve/reject) is logged and piped to active-learning queues for retraining.
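
A minimal version of the feedback loop might log each review and then choose what to send for retraining. The selection rule below is one plausible heuristic, assumed for illustration: rejected predictions first, then uncertainty sampling (predictions whose confidence is closest to 0.5). The `ReviewLog` class and its fields are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class ReviewLog:
    decisions: list = field(default_factory=list)

    def record(self, item_id, confidence, approved):
        """Log a scientist's approve/reject decision on a model suggestion."""
        self.decisions.append({"item_id": item_id, "confidence": confidence, "approved": approved})

    def retraining_queue(self, k):
        """Pick k items: rejected ones first, then the most uncertain (confidence near 0.5)."""
        scored = [(0 if d["approved"] else -1, abs(d["confidence"] - 0.5), d["item_id"])
                  for d in self.decisions]
        return [item for _, _, item in heapq.nsmallest(k, scored)]


reviews = ReviewLog()
reviews.record("colony-1", 0.95, approved=True)
reviews.record("colony-2", 0.55, approved=True)
reviews.record("colony-3", 0.90, approved=False)
reviews.record("colony-4", 0.20, approved=True)
```

With these four decisions, `retraining_queue(2)` returns the confidently wrong `colony-3` followed by the borderline `colony-2`, the two examples most likely to improve the model.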

Compliance and Trust: Engineering for Regulated Science

Biotech may love to “move fast and break things,” but patients and regulators are less enthusiastic.

#1 - 21 CFR Part 11 and Annex 11

Any software that touches electronic records for FDA submissions must enforce audit trails, user access control, and electronic signatures. A pattern that works:

- API Gateway enforces OAuth2.

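- A central Audit Service streams immutable logs to an append-only ledger such as Amazon QLDB (AWS has announced end of support for QLDB, so new builds may need an alternative ledger).

- Signatures require WebAuthn hardware keys, supporting the electronic-signature controls of both Part 11 and EU Annex 11.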
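
The core property a ledger provides here, tamper evidence, can be illustrated with a hash chain in which each entry commits to the hash of the one before it. This is a teaching sketch, not QLDB's API and not a complete Part 11 control: the `AuditLog` class and its field names are hypothetical, and a production system would also need secure storage, time sources, and signature binding.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: altering any past entry breaks verification."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, user, action, record_id, timestamp=None):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"user": user, "action": action, "record_id": record_id,
                "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
                "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})
        return self.entries[-1]["hash"]

    def verify(self):
        """Recompute every hash and check each entry points at its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```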

#2 - Data Privacy

In the post-GDPR era, anonymization alone is insufficient for genomic data because re-identification risk remains high. Many firms now run trusted research environments (TREs): isolated VPCs where de-identified data is analyzed and only aggregate statistics leave the enclave.
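
A common egress control in such enclaves is small-cell suppression: counts below a minimum are masked before results are released. The sketch below assumes a hypothetical threshold of 10 and a hypothetical `releasable_counts` helper; real TREs set the threshold by policy and often also apply secondary suppression, because a masked cell can otherwise be recovered from released totals.

```python
from collections import Counter

# Hypothetical threshold; the real value comes from the TRE's disclosure-control policy.
MIN_CELL_COUNT = 10


def releasable_counts(rows, group_by, min_count=MIN_CELL_COUNT):
    """Aggregate inside the enclave and mask small cells before anything leaves."""
    counts = Counter(row[group_by] for row in rows)
    return {group: n if n >= min_count else f"<{min_count}"
            for group, n in sorted(counts.items())}


rows = [{"genotype": "AA"}] * 12 + [{"genotype": "AG"}] * 3
summary = releasable_counts(rows, "genotype")
```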

#3 - Model Validation

The FDA’s 2024 guidance on AI-enabled device software functions, including software as a medical device (SaMD), formalized the “Predetermined Change Control Plan” (PCCP). As a result, dev teams ship model cards with every release, declaring training data, performance bounds, and update cadence. CI pipelines automatically compare new model metrics against locked baselines; if a drift threshold is exceeded, deployment halts pending QA sign-off.
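
The CI gate described above reduces to comparing candidate metrics against a locked baseline with a tolerance. The baseline values, the 0.02 tolerance, and the `gate` and `ci_exit_code` helpers are hypothetical placeholders; in a real pipeline they would come from the validated release and the PCCP's stated performance bounds.

```python
# Hypothetical locked baseline from the last validated release.
BASELINE = {"auroc": 0.91, "sensitivity": 0.88, "specificity": 0.85}
# Hypothetical tolerance; in practice it comes from the PCCP's performance bounds.
MAX_DROP = 0.02


def gate(candidate, baseline=BASELINE, max_drop=MAX_DROP):
    """Return metrics that are missing or dropped more than max_drop below baseline."""
    return [metric for metric, base in baseline.items()
            if metric not in candidate or base - candidate[metric] > max_drop]


def ci_exit_code(candidate):
    """0 lets the pipeline deploy; 1 halts it pending QA sign-off."""
    failed = gate(candidate)
    if failed:
        print(f"deployment halted pending QA sign-off: {', '.join(failed)}")
        return 1
    return 0
```

A CI job would call `ci_exit_code` with the metrics from the evaluation step and exit with its return value; treating a missing metric as a failure keeps an incomplete evaluation from slipping through.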

Skills and Team Structure: Bridging Wet-Lab and GitHub

Getting all of the above right is not merely a tooling issue; people and culture drive success.

- Embedded Product Trios: High-performing biotech software orgs mirror tech-industry patterns: a product manager + tech lead + domain scientist trio owns a user problem end-to-end, whether that is “clinical sample logistics” or “antibody discovery.” Meetings happen at the bench as often as on Zoom.

- Software Craft Meets Lab Etiquette: Pair programming meets paired pipetting: code reviews include the checkpoint “Will this confuse a postdoc at 2 a.m.?” Conversely, wet-lab SOPs teach developers that a 1 µL error can waste $50,000 of reagents. Cross-training yields mutual respect and fewer production incidents.

- Hiring Polyglots: The ideal hire in 2025 reads C++, writes Python, and carries a pipette license. Yet unicorns are rare. Successful orgs instead form guilds: computational biologists mentor backend engineers on ontology mapping; SREs teach tissue-culture folks the basics of bash.

Looking Ahead: Five Trends to Watch

- Generative BioFoundries. Robotic labs driven by reinforcement-learning agents will design, build, and test biochemical pathways in 24-hour cycles.

- Multi-omics Digital Twins. Predictive models will unify transcriptomic, proteomic, and metabolomic time-series into patient-specific “avatars” that forecast disease progression and therapy response.

- Post-Quantum Cryptography for Genomic Privacy. With quantum computing on the 2030 horizon, life-science firms are beginning to migrate the key management that protects data at rest to lattice-based schemes such as ML-KEM to future-proof patient data.

- Edge AI in Bioreactors. Single-use reactors will embed custom ASICs that perform on-device Raman spectral analysis for their control loops, with no round-trip latency to the cloud.

- Regulation-as-Code. Frameworks like OpenGxP encode regulatory rules as machine-readable tests; CI automatically flags non-compliant pipeline updates, turning audits into diff reviews.
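
The regulation-as-code idea can be sketched as rules expressed as predicates over a pipeline configuration, plus a diff check that reports only the rules a change newly breaks. This does not reflect OpenGxP's actual API; the rule IDs, config keys, and `check`/`diff_review` helpers are hypothetical.

```python
# Hypothetical rules: (rule_id, description, predicate over a pipeline config dict).
RULES = [
    ("AUDIT-01", "audit trail enabled",
     lambda cfg: cfg.get("audit_trail") is True),
    ("SIG-01", "releases require an electronic signature",
     lambda cfg: cfg.get("release", {}).get("e_signature_required") is True),
    ("IMG-01", "container images pinned by digest",
     lambda cfg: all("@sha256:" in img for img in cfg.get("images", []))),
]


def check(cfg):
    """IDs of rules the configuration violates."""
    return [rule_id for rule_id, _, predicate in RULES if not predicate(cfg)]


def diff_review(old_cfg, new_cfg):
    """Rules that passed before a change but fail after it: what an auditor reviews."""
    return sorted(set(check(new_cfg)) - set(check(old_cfg)))


old = {"audit_trail": True, "release": {"e_signature_required": True},
       "images": ["registry.internal/bwa@sha256:ab12"]}
new = {**old, "audit_trail": False}
```

Running `diff_review(old, new)` flags only `AUDIT-01`, so the audit question becomes "why did this commit disable the audit trail?" rather than a full re-inspection of the pipeline.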

Conclusion

In 2025, life-science software development is no longer a peripheral IT activity; it is central to R&D. Robust data management provides the foundation, cloud-native architecture provides scale, AI turns data into actionable insight, and disciplined compliance earns the trust of regulators and patients.

Whether you are refactoring a legacy LIMS, bootstrapping a protein-design model, or writing the validation plan for a clinical decision-support tool, keep in mind that in modern biology, software is the experiment. Build it with the same rigor you bring to the bench, and the discoveries will follow.