
What Is Outlier Detection?

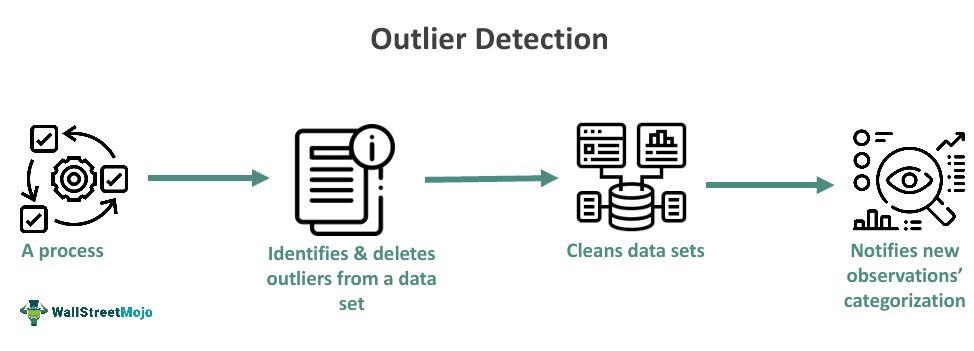

Outlier detection is the process of identifying observations that differ significantly from the rest of a data set. It enables organizations and individuals to clean data sets. Moreover, once outliers have been defined, they can inform the classification of new observations as anomalies.

This process is often an early step in tasks related to data mining. Detecting outliers is crucial in fields such as finance and healthcare because it can help one spot unusual events or behaviors that may result in substantial losses or significant failures in the future.

Key Takeaways

- Outlier detection refers to the process of identifying data objects that diverge from the rest of the observations within a specific data set.

- Individuals or organizations may conduct this process to clean a data set. Businesses typically carry it out at the beginning of the data mining procedure.

- Organizations use different outlier detection techniques, including the z-score and IQR methods.

- A key difference between anomaly and outlier detection is that anomaly detection focuses on spotting rare observations within a data set, whereas outlier detection is mainly used to clean data sets.

Outlier Detection Explained

Outlier detection refers to a procedure that involves spotting and eliminating outliers from a particular data set. Note that there is no fixed or standardized technique for determining outliers, as what counts as an outlier varies with the data population or set. Hence, their detection and identification are somewhat subjective.

Outliers are samples that deviate drastically from a data set's average. One can establish an outlier's characteristics and simplify the identification process via continuous sampling. The process works by observing a given data set and marking the points that stand apart from the rest as outliers. It has multiple applications in the analysis of data streams. Detecting outliers can help companies and people build models that perform well on unseen test data.

This process plays a critical role in data analysis because outliers can bias the results of an entire analysis. It is therefore important to identify and remove these outliers to ensure that the model built is reliable.
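To illustrate how a single outlier can bias a result, here is a minimal sketch using Python's standard library. The transaction amounts are hypothetical and chosen only for illustration.

```python
from statistics import mean, median

# Hypothetical daily transaction amounts (illustrative values only).
clean = [102, 98, 101, 97, 103, 99, 100]

# The same data with one erroneous entry appended.
with_outlier = clean + [1000]

# The mean shifts sharply, while the median barely moves.
print(mean(clean), median(clean))
print(mean(with_outlier), median(with_outlier))
```

With these values, the mean jumps from 100 to 212.5 after the erroneous entry is added, while the median only moves from 100 to 100.5. This is why summary statistics built on the mean are particularly sensitive to outliers.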

Methods

Some popular outlier detection techniques are as follows:

- Peirce’s Criterion: In this case, one sets an error limit for a series of observations. Observations beyond this limit are disregarded because they are assumed to contain significant errors.

- Dixon’s Q Test: Assuming the data are normally distributed, this technique tests whether a suspect value is bad data that should be rejected. Individuals must use this method sparingly and never apply it more than once to a data set.

- Grubbs’ Test: This technique is based on the assumption that the data come from a normal distribution. It tests for and eliminates outliers one at a time.

- Chauvenet’s Criterion: It helps determine whether a suspect value falls within the bounds expected for the data set, given its mean and standard deviation, and therefore belongs to it, or whether the value is likely spurious.
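As one illustration of the criteria above, the following is a minimal sketch of Chauvenet's criterion, assuming normally distributed data and a single pass over the data set. The function name `chauvenet_outliers` and the sample readings are hypothetical. A point is flagged when the expected number of observations at least as extreme as it, given the sample size, falls below 0.5.

```python
import math
from statistics import mean, stdev


def chauvenet_outliers(values):
    """Return the values flagged by a single pass of Chauvenet's criterion."""
    n = len(values)
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing to flag

    flagged = []
    for x in values:
        z = abs(x - mu) / sigma
        # Two-sided probability of a standard normal value beyond |z|.
        tail_prob = math.erfc(z / math.sqrt(2))
        # Flag if fewer than half an observation is expected this far out.
        if n * tail_prob < 0.5:
            flagged.append(x)
    return flagged


# Hypothetical instrument readings with one suspicious value.
readings = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.7, 10.3, 10.1, 14.5]
print(chauvenet_outliers(readings))
```

For these readings, only 14.5 is flagged. Note that the mean and standard deviation used here are themselves affected by the suspect value, which is one reason such tests are applied cautiously.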

Some other methods are as follows:

- Z-Score Method: It measures how many standard deviations a data point lies from the mean.

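- Interquartile Range Method: This technique involves calculating the interquartile range (IQR), the difference between the third and first quartiles, and flagging points that lie far outside it, commonly more than 1.5 times the IQR below the first quartile or above the third.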

- Local Outlier Factor or LOF Method: It compares a data point’s local density with that of its neighbors. Points lying in much sparser regions than their neighbors are treated as outliers.
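The z-score and IQR methods from the list above can be sketched in a few lines of standard-library Python. The function names, the thresholds (3 standard deviations and 1.5 times the IQR, both common conventions rather than fixed rules), and the sample data are assumptions made for illustration.

```python
from statistics import mean, pstdev, quantiles


def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []
    return [x for x in values if abs(x - mu) / sigma > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [x for x in values if x < lower or x > upper]


# Hypothetical sample: fourteen typical values and one extreme value.
data = [12, 14, 15, 13, 16, 14, 15, 13, 14, 15, 13, 14, 16, 12, 95]
print(zscore_outliers(data))
print(iqr_outliers(data))
```

Both functions flag only the value 95 for this sample. One caveat with the z-score rule is that an extreme value inflates the standard deviation it is measured against, so in very small samples no point can exceed a threshold of 3; the IQR rule is less affected because quartiles are robust to a few extreme values.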

Examples

Let us look at a few outlier detection examples to understand the concept better.

Example #1

Suppose ABC, a lending institution, records all its business-related transactions using specialized software to avoid errors and improve operational efficiency. The organization’s management team noticed a significant deviation from their expected gross revenue at the end of the financial year. Suspecting a potential error or fraud, the chief executive officer instructed some data analysts within the organization to conduct an outlier detection process. The analysts carried out this procedure and detected multiple fraudulent transactions. This enabled the company to address the issue and report correct figures in their financial statements.

Example #2

The European Central Bank utilized clustering methods along with a moving window algorithm to conduct an outlier detection process and spot suspicious observations within financial market time series. This method enabled the organization to detect the data points that deviated significantly from the data set’s average. Moreover, this technique provided the bank with clean financial market data for daily use in risk management, financial stability, and monetary policy analysis.
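The general idea of a moving window can be sketched as follows. This is not the bank's actual algorithm, which the example does not describe in detail; it is a simplified illustration that compares each point with the median of the preceding window, scaled by the median absolute deviation (MAD). The function name, window size, threshold, and price series are all hypothetical.

```python
from statistics import median


def rolling_outliers(series, window=5, threshold=3.0):
    """Return indices of points far from the median of the preceding window."""
    flagged = []
    for i in range(window, len(series)):
        recent = series[i - window:i]
        center = median(recent)
        # Median absolute deviation: a robust measure of spread.
        mad = median(abs(x - center) for x in recent)
        if mad == 0:
            continue  # flat window: spread is undefined, skipped in this sketch
        # 1.4826 makes the MAD comparable to a standard deviation for normal data.
        score = abs(series[i] - center) / (1.4826 * mad)
        if score > threshold:
            flagged.append(i)
    return flagged


# Hypothetical price series with one spike at index 6.
prices = [100.0, 100.5, 99.8, 100.2, 100.1, 100.4, 135.0, 100.3, 100.0, 100.6]
print(rolling_outliers(prices))
```

For this series, only index 6 (the value 135.0) is flagged. Using the median and MAD rather than the mean and standard deviation keeps the spike from distorting the windows that follow it.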

Applications

This concept has applications in various areas, including bio-informatics, distributed systems, healthcare, cyber-security, finance, and network analysis. As both the volume of data and the links within it grow across a range of domains, network-based methods are likely to find more applications and research opportunities in different settings.

In finance, this process can help businesses detect fraud related to insurance and credit cards. Moreover, outlier detection techniques are utilized in military surveillance to help detect and prevent enemy attacks.

Pros And Cons

Let us look at the benefits and limitations of this process:

Pros

- Reduces the noise while conducting analysis

- Improves data analysis accuracy

- Helps discover significant information within the data

- Simple methods produce results that are easy to explain

Cons

- Requires additional computational resources

- Can be sensitive to the distribution’s scale and shape

Outlier Detection vs Anomaly Detection vs Novelty Detection

Understanding novelty, anomaly, and outlier detection can be confusing for individuals new to such concepts. To understand these processes clearly, one must know their distinct characteristics. Hence, the table below highlights some key distinguishing factors.

| Outlier Detection | Anomaly Detection | Novelty Detection |
|---|---|---|
| It is a procedure one can use to spot the data points that are far away from a data set’s average. | Anomaly detection involves spotting observations occurring extremely rarely within a certain data set. The characteristics of such observations are significantly different from most of the data. | This statistical technique helps in determining unknown or new data. |
| This process helps clean data sets. | It can help spot suspicious activity that is not in line with one’s standardized behavioral patterns. | This process helps spot anomalies in data that exist only in new instances. |
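
The practical difference with novelty detection is that the limits are learned from a reference data set assumed to be clean and then applied to new observations only. A minimal sketch of that pattern, using IQR-based limits, follows; the function names `fit_fences` and `is_novel` and all values are hypothetical.

```python
from statistics import quantiles


def fit_fences(reference, k=1.5):
    """Learn lower and upper limits from reference data assumed to be clean."""
    q1, _, q3 = quantiles(reference, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def is_novel(x, fences):
    """Check whether a new observation falls outside the learned limits."""
    lower, upper = fences
    return x < lower or x > upper


# Hypothetical reference readings, assumed to contain no outliers.
reference = [20.1, 19.8, 20.4, 20.0, 19.9, 20.2, 20.3, 19.7]
fences = fit_fences(reference)

# New observations arriving after the limits were learned.
new_readings = [20.1, 23.5, 19.9]
print([is_novel(x, fences) for x in new_readings])
```

Here only the second new reading, 23.5, falls outside the learned limits. In outlier detection, by contrast, the limits would be computed from the same data set that is being cleaned.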