What Is A Data Set?

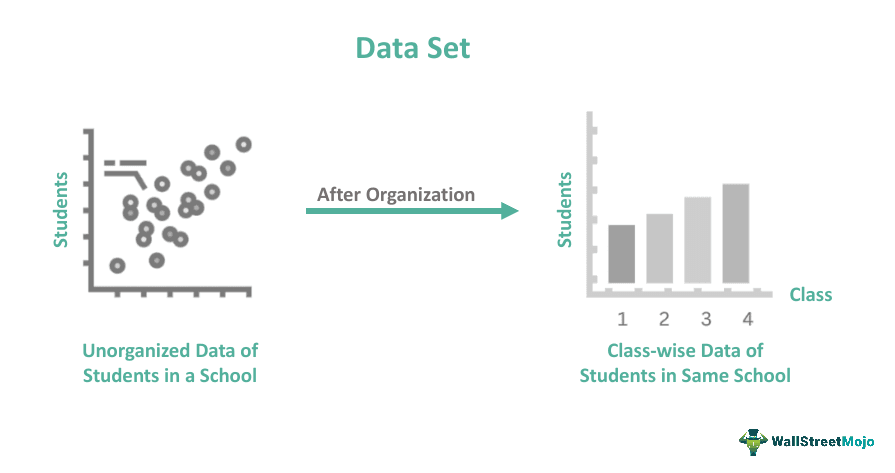

A data set (also written dataset, and sometimes called a data collection) is a structured collection of data points or observations that are organized and stored together for analysis or processing. Data sets are used across many fields and applications, including scientific research, business analytics, and machine learning.

They provide a structured representation of real-world data and serve as the basis for conducting statistical analyses, deriving insights, and building predictive models. A data set typically consists of individual data elements, often called data points or samples, each representing a specific entity, event, or instance. Each data point is described by attributes, which may be numerical (e.g., age, height) or categorical (e.g., gender, color), depending on the nature of the data.

Key Takeaways

- Data sets are groups of data organized in a specific manner to make their analysis or processing easier.

- They make it easier to use and analyze data, ensure data quality, and make informed decisions based on data-driven insights.

- Well-documented data enable reproducibility, allowing others to validate or replicate findings. In addition, transparency and accountability are promoted when data are available for review and scrutiny.

- Ethical considerations should guide the collection, handling, and usage of data. Fairness, avoiding discrimination, addressing biases, and protecting individuals' privacy are essential ethical considerations.

Data Set Explained

A data set is a group of observations in a specific format. It represents data related to a particular topic or domain. It is a critical component in data analysis and machine learning but has limitations and challenges. Here are some essential aspects to consider:

- Data Quality: The data quality within a set is paramount. Inaccurate, incomplete, or biased data can lead to flawed analyses and erroneous conclusions. Data cleaning and pre-processing techniques are necessary to handle missing values, outliers, and inconsistencies. However, these processes can be time-consuming and may introduce additional biases or distortions.

- Sample Size and Representativeness: The size and representativeness of data are crucial for making valid inferences and generalizations. A small or unrepresentative sample may not accurately capture the underlying population, limiting the reliability and applicability of the findings.

- Sampling Bias: Data sets can be prone to sampling bias, which arises from non-random sampling methods or from inherent biases in data collection. Addressing and mitigating sampling bias is essential to avoid skewed results and ensure fairness in decision-making.

- Data Privacy and Ethical Concerns: Data sets often contain sensitive and personally identifiable information. Protecting privacy and ensuring ethical use of data is crucial. Anonymization techniques and compliance with relevant data protection regulations are necessary to safeguard individuals' rights and prevent misuse or unauthorized access.

- Data Integration and Interoperability: Data often come from multiple sources or arrive in different formats, which makes integration and interoperability challenging. Harmonizing and merging disparate data sets can be complex and time-consuming, often requiring data engineering tools alongside careful consideration of data schema, variable definitions, and data transformation processes.
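
The data quality point above can be made concrete with a small sketch. It uses only the Python standard library, and everything in it is an assumption made for illustration: the records, the field names, the choice of median imputation for missing values, and the median-absolute-deviation rule with a threshold of 3.5 for flagging outliers. Other cleaning strategies may suit a given data set better.

```python
from statistics import median

# Hypothetical raw records; None marks a missing value.
raw = [
    {"id": 1, "age": 34, "income": 52000},
    {"id": 2, "age": None, "income": 48000},
    {"id": 3, "age": 29, "income": None},
    {"id": 4, "age": 41, "income": 55000},
    {"id": 5, "age": 38, "income": 45000},
    {"id": 6, "age": 45, "income": 990000},  # suspiciously large value
]

def impute_median(rows, field):
    """Return copies of the rows with missing `field` values set to the observed median."""
    observed = [r[field] for r in rows if r[field] is not None]
    fill = median(observed)
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]

def flag_outliers(rows, field, threshold=3.5):
    """Return ids whose distance from the median exceeds `threshold` times the MAD."""
    values = [r[field] for r in rows]
    center = median(values)
    mad = median([abs(v - center) for v in values])  # median absolute deviation
    if mad == 0:
        return []  # no spread to compare against
    return [r["id"] for r in rows if abs(r[field] - center) / mad > threshold]

cleaned = impute_median(impute_median(raw, "age"), "income")
suspect_ids = flag_outliers(cleaned, "income")
```

Flagging rather than deleting the suspicious record keeps the decision visible, which matters because cleaning steps can themselves introduce bias.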

Properties

Some essential properties of data sets are:

- Size: The size of a data set refers to the number of data points or observations it contains. It can range from small sets with a few samples to large-scale data with millions or billions of data points. The size affects computational requirements, storage capacity, and the choice of analysis techniques.

- Dimensions: The dimensions of a data set refer to the number of variables or features associated with each data point. In a tabular representation, each column corresponds to a specific attribute, so the number of columns gives the dimensionality. The number of dimensions can vary, and high-dimensional data sets pose challenges in visualization, analysis, and modeling.

- Granularity: The granularity of data refers to the level of detail or specificity. It determines the precision and resolution of the observations. For example, a sales data set could be recorded at a daily, monthly, or yearly level, with each level of granularity providing different insights and analysis possibilities.

- Data Types: Data sets consist of data points with different data types. Common data types include numerical (e.g., integers, decimals), categorical (e.g., labels, categories), text, dates, or binary values. Understanding the data types in a data set is essential for appropriate data handling, preprocessing, and analysis.

- Structure: Data sets can have structured or unstructured formats. Structured data sets have a well-defined schema or organization, typically represented in tabular form. Unstructured data sets, such as text documents, images, or social media posts, lack a predefined structure and therefore require specialized extraction, transformation, and analysis techniques.
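
As a rough illustration of these properties, the sketch below inspects a tiny, made-up sales data set held as a list of dictionaries. The field names and values are hypothetical, and the monthly roll-up is just one way to show a change of granularity.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sales records recorded at daily granularity.
records = [
    {"order_id": 1, "amount": 20.0, "region": "North", "order_date": date(2023, 1, 5), "is_return": False},
    {"order_id": 2, "amount": 35.5, "region": "South", "order_date": date(2023, 1, 20), "is_return": False},
    {"order_id": 3, "amount": 12.5, "region": "North", "order_date": date(2023, 2, 3), "is_return": True},
]

size = len(records)            # number of observations
dimensions = len(records[0])   # number of attributes per observation

# Data type of each attribute, read from the first record.
column_types = {name: type(value).__name__ for name, value in records[0].items()}

# Coarser granularity: roll daily records up to monthly totals.
monthly_totals = defaultdict(float)
for r in records:
    key = (r["order_date"].year, r["order_date"].month)
    monthly_totals[key] += r["amount"]
monthly_totals = dict(monthly_totals)
```

The daily records can always be aggregated to monthly totals, but the reverse is not possible, which is why the granularity chosen at collection time limits later analysis.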

Types

Some commonly recognized types of data sets are:

- Cross-Sectional Data: Cross-sectional data sets capture observations of different entities or subjects at a specific point in time. Each data point represents a distinct unit. For example, a cross-sectional data set may contain information about individuals' demographics, income, and education in a particular year.

- Time Series Data: Time series data sets record observations over several periods, typically at regular intervals. The data points are in chronological order, allowing the analysis of trends, patterns, and seasonality. Examples include stock prices, weather measurements, or quarterly sales figures.

- Longitudinal Data: Longitudinal data sets involve multiple observations or measurements taken from the same subjects or entities over a period of time. This type of data set captures individual-level changes, trends, and development.

- Panel Data: Panel data sets combine cross-sectional and longitudinal data, involving observations of multiple entities over multiple periods. They capture both individual-level and time-related variation, allowing individual and time effects to be analyzed simultaneously.

- Spatial Data: Spatial data sets contain geographic or spatial information associated with each data point. They capture data across different geographical locations, identified by attributes such as latitude and longitude or postal codes.

- Textual Data: Textual data consist of unstructured or semi-structured textual information, such as documents, articles, emails, or social media posts. Natural Language Processing (NLP) techniques extract, preprocess, and analyze text data for tasks like sentiment analysis, topic modeling, and classification.

- Image and Video Data: Image and video data contain visual information, such as pictures or frames. They are used in computer vision tasks, including object recognition, image classification, and video analysis. These data sets can be large and require specialized techniques for processing and analysis.

- Relational Data: Relational data are structured using a relational database model, where data points are stored in separate tables, and relationships between tables are defined through keys.
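
The relationship between cross-sectional, time series, and panel data can be sketched with a toy example. The store names, years, and revenue figures below are invented for illustration. The panel holds one record per entity and period, a cross-section fixes the period, and a time series fixes the entity.

```python
# Hypothetical panel: one record per (store, year) pair.
panel = [
    {"store": "A", "year": 2021, "revenue": 100},
    {"store": "A", "year": 2022, "revenue": 120},
    {"store": "B", "year": 2021, "revenue": 80},
    {"store": "B", "year": 2022, "revenue": 95},
]

# Cross-section: every store observed in a single year.
cross_section_2022 = {r["store"]: r["revenue"] for r in panel if r["year"] == 2022}

# Time series: a single store observed over the years, in chronological order.
time_series_a = [
    (r["year"], r["revenue"])
    for r in sorted(panel, key=lambda r: r["year"])
    if r["store"] == "A"
]
```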

Examples

Let us understand it better with the help of the following examples:

Example #1

Suppose we are studying the performance of students in a hypothetical school. We collect data on various attributes of the students and their academic achievements. Our data set consists of 100 students, and we have the following information for each student:

- Student ID: A unique identifier for each student.

- Gender: Categorical variable indicating the student's gender (e.g., Male or Female).

- Age: Numerical variable representing the student's age in years.

- Grade: Numerical variable indicating the grade level of the student (e.g., 6th grade, 7th grade).

- Study Hours: Numerical variable representing the average number of hours the student studies daily.

- Math Score: Numerical variable representing the student's score on a math exam.

- Science Score: Numerical variable representing the student's score on a science exam.

- English Score: Numerical variable representing the student's score on an English exam.

This data set captures information about each student's demographic characteristics (gender, age), academic level (grade), study habits (study hours), and academic performance (math score, science score, English score).

With this data set, we can perform various analyses. For example, we could analyze the relationship between study hours and academic performance to see if there is a correlation between the two. We could also compare the performance of male and female students or examine how age and grade level relate to academic achievement.
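
A minimal sketch of two of these analyses follows. The five student records are invented purely for illustration (the example above describes 100 students but gives no actual values), and the Pearson correlation coefficient is one common choice for measuring a linear relationship, not the only one.

```python
from math import sqrt

# Hypothetical records for a handful of students; not real data.
students = [
    {"student_id": 1, "gender": "Female", "study_hours": 1.0, "math_score": 55},
    {"student_id": 2, "gender": "Male", "study_hours": 2.0, "math_score": 60},
    {"student_id": 3, "gender": "Female", "study_hours": 3.0, "math_score": 70},
    {"student_id": 4, "gender": "Male", "study_hours": 4.0, "math_score": 75},
    {"student_id": 5, "gender": "Female", "study_hours": 5.0, "math_score": 90},
]

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

hours = [s["study_hours"] for s in students]
math_scores = [s["math_score"] for s in students]
r_hours_math = pearson(hours, math_scores)

def mean_score_by(rows, group_field, value_field):
    """Average of `value_field` within each group of `group_field`."""
    groups = {}
    for row in rows:
        groups.setdefault(row[group_field], []).append(row[value_field])
    return {group: sum(vals) / len(vals) for group, vals in groups.items()}

mean_math_by_gender = mean_score_by(students, "gender", "math_score")
```

A coefficient close to 1 would indicate a strong positive linear association in the sample, but it would not by itself show that more study hours cause higher scores.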

Example #2

A well-known data set from the financial news is the "Enron Email Dataset." The Enron Corporation, an American energy company, was involved in a massive accounting scandal in the early 2000s. An extensive collection of emails and other electronic communications from Enron employees was made publicly available as part of the investigation.

The Enron Email Dataset contains approximately 500,000 emails sent and received by employees of Enron, including senior executives, over several years leading up to the company's collapse. This data set provides insight into the internal communications and operations of the company during a critical period.

The Enron Email Dataset gained significant attention from researchers, analysts, and data scientists as it offered an opportunity to study corporate misconduct, fraud, and the role of communication networks in such scandals. Researchers have utilized this data set to analyze communication patterns, identify key individuals involved in the scandal, and study organizational behavior and decision-making dynamics.

The Enron Email Dataset has been widely used in various fields, including finance, machine learning, and natural language processing. In addition, it has served as a valuable resource for studying corporate governance, detecting fraud, developing email classification algorithms, and exploring the ethical dimensions of business practices.

Advantages And Disadvantages

Some of the key pros and cons of data sets are as follows.

Advantages

- Information and Insights: Data sets provide a structured representation of data, allowing for the organization and analysis of information. They enable researchers, analysts, and data scientists to derive insights, identify patterns, and make informed decisions based on data analysis.

- Reproducibility and Validation: Well-documented data sets facilitate reproducibility, as others can use the same data to validate or replicate findings. This promotes transparency, accountability, and the advancement of scientific knowledge.

- Efficiency: Data sets allow for efficient data storage and retrieval. Organizing data in a structured format makes it easier to search for specific information, apply data processing techniques, and perform data analysis at scale.

Disadvantages

- Data Quality and Bias: Data sets can suffer from data quality issues, including missing values, errors, or biases. Inaccurate or incomplete data can lead to flawed analyses and unreliable results. Ensuring data quality and addressing biases require careful data collection and preprocessing.

- Limited Scope and Representativeness: Data sets are finite representations of real-world phenomena and may not capture the full complexity or diversity of a problem domain. Sampling methods or data collection processes can introduce limitations in terms of representativeness, potentially leading to incomplete or skewed insights.

- Data Privacy and Security Concerns: Data sets often contain sensitive and personally identifiable information. If not handled properly, data sets can pose privacy and security risks, potentially leading to unauthorized access, breaches, or data misuse. Therefore, protecting data privacy and complying with regulations is essential.
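
One way to reduce the exposure of personally identifiable information is to replace direct identifiers with keyed hashes before analysis. The sketch below uses Python's standard `hmac` and `hashlib` modules. The records, the field names, and the `SECRET_KEY` value are hypothetical. Note that this is pseudonymization rather than full anonymization: the remaining attributes may still allow re-identification, and whether the approach satisfies a given regulation is a separate question.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real key should be stored outside the source code.
SECRET_KEY = b"replace-with-a-secret-key"

def pseudonymize(value, key=SECRET_KEY):
    """Map an identifier to a stable token using HMAC-SHA256."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical records containing direct identifiers.
records = [
    {"name": "Alice Example", "email": "alice@example.com", "score": 88},
    {"name": "Bob Example", "email": "bob@example.com", "score": 75},
]

def deidentify(record):
    """Drop direct identifiers and keep a token so records can still be linked."""
    return {
        "person_token": pseudonymize(record["email"]),
        "score": record["score"],
    }

safe_records = [deidentify(r) for r in records]
```

Because the same input always yields the same token under the same key, records for one person can still be joined across tables without exposing the name or email address.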

Data Set vs Database vs DataFrame

A data set is a group of data points or observations organized and structured in a specific format. A database is a collection of data that is gathered, managed, and stored systematically. A DataFrame is a tabular data structure used in programming environments such as Python (through the pandas library) and R, primarily for data analysis and manipulation.

Let us compare them.

#1 - Structure

A data set can have various structures, including tabular, hierarchical, or unstructured formats, depending on the nature of the data. A database, in contrast, follows a structured layout with predefined schemas, tables, and relationships between tables. A DataFrame is a tabular structure with rows and columns, similar to a database table or spreadsheet.

#2 - Scope

A data set can represent a subset or a complete data collection related to a specific topic or domain. A database, in contrast, can contain multiple tables and supports storing and managing a wide range of data. A DataFrame is typically used to represent a subset of data for analysis or manipulation, often derived from a larger data source.

#3 - Functionality

Data sets provide the raw data for analysis but typically do not offer built-in functionality for querying, filtering, or data manipulation. Many analysts work around this limitation by exporting the data, for example from Salesforce to Excel, where custom manipulations and analyses are easier to perform. Databases provide a wide range of functionality for data storage, retrieval, querying, indexing, and managing relationships between data. DataFrames offer built-in methods for data manipulation, filtering, aggregation, and analysis, so data can be handled efficiently.
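
The difference in functionality can be sketched with Python's built-in `sqlite3` module. The sales rows and the `sales` table name are hypothetical. With the raw data set, filtering has to be written by hand, while the database accepts a declarative query.

```python
import sqlite3

# Hypothetical raw data set: (store, year, revenue) tuples.
rows = [("A", 2021, 100), ("A", 2022, 120), ("B", 2021, 80), ("B", 2022, 95)]

# Raw data set: filtering logic is written by hand.
rows_2022 = [r for r in rows if r[1] == 2022]

# Database: load the same rows into an in-memory SQLite table and query it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store TEXT, year INTEGER, revenue INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
totals = conn.execute(
    "SELECT store, SUM(revenue) FROM sales GROUP BY store ORDER BY store"
).fetchall()
conn.close()
```

A DataFrame library such as pandas offers comparable in-memory operations (for example, `groupby` for aggregation); it is left out here only to keep the sketch within the standard library.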

#4 - Usage

Data sets are commonly used as input for analysis, modeling, or machine learning tasks. They may require preprocessing or transformation before analysis. Databases are used for persistent storage, efficient data retrieval, and as a central repository for structured data in applications and systems. DataFrames are widely used in data analysis and manipulation tasks, allowing for convenient slicing, filtering, grouping, merging, and transforming of data.